Job control for parallel and remote execution

The following topics are discussed:

Installing job control files

MPI configuration

Users have the option to execute Phoenix NLME jobs remotely using NLME Job Control System (JCS) or Phoenix JMS. Below is a comparison of the two options:

- Remote Windows submission: JMS only

- Remote Linux submission: JCS only

- Disconnect/reconnect/stop: JCS and JMS

- Progress reporting: JCS and JMS

- Remote software required:

  JMS: Full Phoenix installation

  JCS:

  •GCC

  •R (batchtools, XML, reshape, Certara.NLME8)

  •ssh

  •MPI (Open MPI for Linux platforms, MPICH for Windows)

- Parallelization method:

  JMS: Local MPI

  JCS:

  •MPI for within-job parallelization

  •Linux Grid (TORQUE, SGE, LSF)* or MultiCore for between-job parallelization

*TORQUE = Terascale Open source Resource and QUEue Manager, SGE = Sun Grid Engine, LSF = Platform Load Sharing Facility.

This section focuses on the Job Control Setup. For information on JMS, refer to “Job Management System (JMS)”. Phoenix NLME jobs can be executed on a number of different platform setups, enabling the program to take full advantage of available computing resources. All of the run modes can be executed locally as well as remotely.

One NLME job can be executed using:

- Single core on the local host

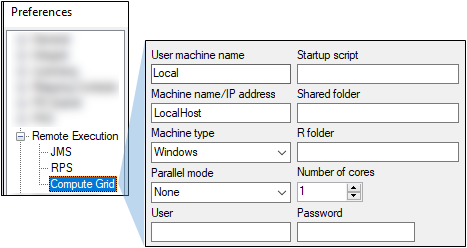

The default configuration profile is as follows:

-

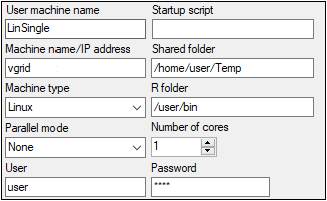

Single core on a remote host

An example of the configuration profile is as follows:

-

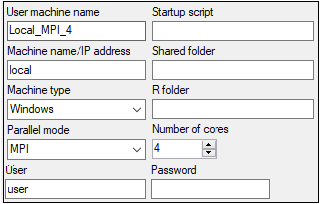

Using By Subject (first order engines, IT2S-EM)/By Sample (QRPEM) MPI parallelization on the local host

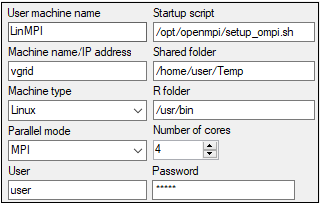

The Subject/Sample method of MPI parallelization is chosen automatically based on the selected engine. MPI software is required and, for a remote host, R and the Certara.NLME8 package should be installed.

Each model and dataset is unique, and the analyst needs to explore the best solution for the current project. However, some general guidelines apply to the majority of projects. The speed of model execution generally depends on the number of computational cores, the speed of those cores, and the speed of writing to disk. In general, increasing the number of cores decreases computation time, so one might expect a run parallelized across 8 MPI threads to be twice as fast as one using 4 threads. More threads do help, but the relationship is not linear, because some segments of the computation are not parallelized and collecting results from the different threads adds overhead.
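The non-linear relationship can be illustrated with Amdahl's law, which is a general model of parallel speedup and not a description of how Phoenix NLME schedules its work. The sketch below assumes a hypothetical parallel fraction of 0.9 (the share of run time that benefits from additional MPI threads). The real fraction depends on the model, engine, and dataset, and the law does not account for the cost of collecting results.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over a single core according to Amdahl's law."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical value: 90% of the run time is assumed to parallelize.
PARALLEL_FRACTION = 0.9

for n in (1, 2, 4, 8, 16):
    print(f"{n:>2} cores: {amdahl_speedup(PARALLEL_FRACTION, n):.2f}x")
```

Under this assumption, moving from 4 to 8 threads improves run time by a factor of roughly 1.5 rather than 2.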

•For Windows platforms, the default profile with MPI parallelization is as follows:

•For Linux remote runs, an example profile for MPI parallelization is as follows:

Execution can be parallelized by job level for the following run modes:

Simple (Sorted datasets)

Scenarios

Bootstrap

Stepwise Covariate search

Shotgun Covariate search

Profile

The implemented methods of “by job” parallelization are:

- Multicore: multiple jobs are executed in parallel on the local or remote host

R and the Certara.NLME8 package should be installed on the chosen host. Note that the Multicore method cannot be combined with MPI (by Subject/by Sample MPI parallelization within each job).

The user can control the number of processes run in parallel (Number of cores field).

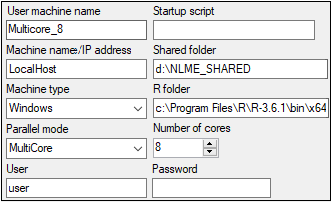

•An example of Windows local configuration is as follows.

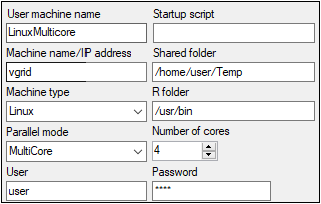

•An example of Linux remote configuration is as follows:

- Submission to a supported remote Linux grid

Supported grids are SGE, LSF and TORQUE. The number of cores in the configuration for the grid means the number of nodes to be used.

Number of cores in a grid configuration is the number of cores to be used. Each host can be configured to have multiple cores and each core can handle a separate job.

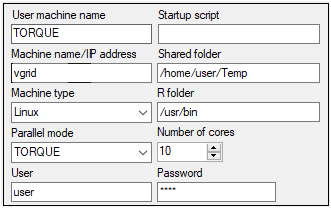

An example profile for submission to the TORQUE grid is as follows:

Caution: In some grid configurations, if the number of cores specified for a grid exceeds the total number of available cores, the job can remain in the queue. If the job cannot be canceled from within Phoenix, then a direct cancellation through ssh is required. Care must be taken especially for burstable grids, where additional resources (slots) can be requested but not used. Periodic monitoring of the running jobs for the current user is recommended.
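Monitoring and direct cancellation are typically done with the scheduler's own command-line tools in an ssh session on the submission host. The sketch below only assembles the usual commands (`qstat` and `qdel` for TORQUE and SGE, `bjobs` and `bkill` for LSF). The helper names and the user name `analyst` are hypothetical, and the options accepted can vary by site and scheduler version.

```python
# Standard scheduler commands; site-specific wrappers may differ.
_LIST_JOBS = {
    "TORQUE": ["qstat", "-u"],
    "SGE": ["qstat", "-u"],
    "LSF": ["bjobs", "-u"],
}
_CANCEL_JOB = {"TORQUE": "qdel", "SGE": "qdel", "LSF": "bkill"}


def list_jobs_command(grid: str, user: str) -> list:
    """Command that lists the queued and running jobs of one user."""
    key = grid.upper()
    if key not in _LIST_JOBS:
        raise ValueError(f"unsupported grid type: {grid}")
    return _LIST_JOBS[key] + [user]


def cancel_job_command(grid: str, job_id: str) -> list:
    """Command that cancels a single job by its scheduler job ID."""
    key = grid.upper()
    if key not in _CANCEL_JOB:
        raise ValueError(f"unsupported grid type: {grid}")
    return [_CANCEL_JOB[key], job_id]


# Print the commands to run on the submission host (hypothetical user name).
print(" ".join(list_jobs_command("TORQUE", "analyst")))
print(" ".join(cancel_job_command("LSF", "12345")))
```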

The NLME jobs submitted to the grid can be parallelized using MPI if the system has the appropriate MPI service installed and the Parallel mode is set to one of the three *_MPI options (LSF_MPI, SGE_MPI, or TORQUE_MPI). These modes parallelize the runs by job as well as by Sample/Subject within each job.

For any of the *_MPI modes, the number of cores used to parallelize each job is calculated as the smaller of the following two numbers:

(1) the number of cores in the configuration divided by the number of jobs, or

(2) the number of unique subjects in a specific job divided by 3. If there is an uneven number of unique subjects in each replicate, the smallest number of subjects will be used for the calculation.

Example 1: There are 300 cores available according to the configuration profile, 4 jobs (replicates) requested, and 200 subjects in each replicate. Each of the 4 replicates would parallelize across 66 cores (300/4 = 75; 200/3 = 66, rounded down; 66 < 75). Total cores used = 264.

Example 2: There are 100 cores available according to the configuration profile, 3 jobs (replicates) requested, and 300 subjects in each replicate. Each of the 3 replicates would parallelize across 33 cores (100/3 = 33, rounded down; 300/3 = 100; 33 < 100). Total cores used = 99.
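The rule and the two examples can be reproduced with a short calculation. This is a minimal sketch, not the code used by the software: the function name is hypothetical, and integer (rounded-down) division is assumed because it matches the figures in the examples. How the software handles a result of zero is not stated, so no lower bound is applied here.

```python
from typing import Sequence


def mpi_cores_per_job(configured_cores: int, n_jobs: int,
                      subjects_per_job: Sequence[int]) -> int:
    """Smaller of (configured cores / jobs) and (fewest unique subjects / 3)."""
    if configured_cores < 1 or n_jobs < 1 or not subjects_per_job:
        raise ValueError("cores, jobs, and subject counts must be non-empty and positive")
    by_cores = configured_cores // n_jobs       # rule (1), rounded down (assumption)
    by_subjects = min(subjects_per_job) // 3    # rule (2), smallest replicate is used
    return min(by_cores, by_subjects)


# Example 1: 300 cores, 4 replicates, 200 subjects each
per_job = mpi_cores_per_job(300, 4, [200] * 4)
print(per_job, per_job * 4)

# Example 2: 100 cores, 3 replicates, 300 subjects each
per_job = mpi_cores_per_job(100, 3, [300] * 3)
print(per_job, per_job * 3)
```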

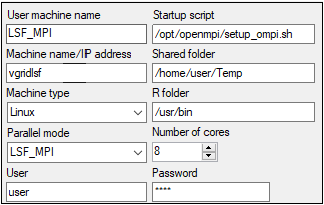

An example of the configuration profile is as follows:

Caution: For some grid configurations, the number of MPI cores calculated for a particular job cannot exceed the total number of hosts available on the grid. Otherwise, the software can ask for more hosts than are available, and the job may freeze or exit with an error. In such cases, it is advised to switch to the grid mode without MPI.

Additional software and libraries are required for certain platform setups.

For within-job parallelization on the local host, the MPICH2 1.4.1 software package is required. It is installed during a complete installation of Phoenix or can be selected during a custom Phoenix installation. This application facilitates message-passing for distributed-memory applications used in parallel computing. (If needed, the mpich2-1.4.1p1-win-x86-64.msi file is located in <Phoenix_install_dir>\Redistributables.) MPICH2 needs to be installed on all Windows machines included in the MPI-ring or used to submit a job to the MPI-ring. If you have another MPICH service running, you must disable it. Currently, MPI is only supported on Windows.

For parallel processing on a remote host or for using multicore parallelization locally, the following must be installed:

- R 3.3 (or later) can be obtained from http://cran.r-project.org.

- R package ‘batchtools’ handles submission of NLME replicates to a Linux/Windows GRID.

- R package ‘reshape’ is used for summarizing the NLME spreadsheet.

- R package ‘XML’ is used to manage reading/writing progress.xml.

- Certara.NLME8_1.0.1.tar.gz is the R package for NLME distributed with Phoenix, located in <Phoenix_install_dir>\application\lib\NLME\Executables. From the R user interface, type the following command:

install.packages("Certara.NLME8_1.0.1.tar.gz", repos=NULL, type="source")

If the current version of the package is intended to be the default version across the grid, install it with elevated privileges.

- SSH/SFTP needs to be installed on all remote hosts that will be used with the MultiCore parallelization method or act as a submission host to a GRID. SSH is installed by default on Red Hat Enterprise Linux (RHEL) version 7.x. To facilitate job submission from Windows to Linux, NLME in Phoenix (on Windows) contains an SSH library.

- For grid execution (SGE/LSF/TORQUE), installation of Open MPI (v 1.10.6) as a parallel platform on the grid is recommended. Please refer to https://www.open-mpi.org/faq/ for information. Standard grids have Open MPI installed by default.

MPI configuration is done by the Phoenix installer at the time of installation.

- To check MPI operation, see if there is an SMPD service running on Windows by entering the following into a command shell:

smpd -status

- If it is reported that no SMPD is running, enter the following command:

smpd -install

- Running smpd -status again should report that SMPD is running on the local Windows host.

Note: If one of the MPI processes crashes, other MPI processes and the parent process mpiexec.exe may be left running, putting Phoenix NLME in an unpredictable state. Use the Task Manager to check if mpiexec.exe is still running and stop it, if it is. This will stop any other MPI processes.
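The same check can be scripted instead of using the Task Manager. The sketch below relies on the standard Windows `tasklist` and `taskkill` commands. The function names are hypothetical, and the script is an illustration rather than part of Phoenix.

```python
import subprocess

IMAGE_NAME = "mpiexec.exe"


def tasklist_command(image_name: str = IMAGE_NAME) -> list:
    """tasklist call filtered to a single executable name."""
    return ["tasklist", "/FI", f"IMAGENAME eq {image_name}"]


def is_listed(tasklist_output: str, image_name: str = IMAGE_NAME) -> bool:
    """True if the executable name appears in the tasklist output."""
    return image_name.lower() in tasklist_output.lower()


def stop_leftover_mpiexec() -> bool:
    """Windows only: force-stop mpiexec.exe if it is still running.

    Returns True if a process was found and taskkill was issued.
    """
    result = subprocess.run(tasklist_command(), capture_output=True,
                            text=True, check=True)
    if not is_listed(result.stdout):
        return False
    subprocess.run(["taskkill", "/IM", IMAGE_NAME, "/F"], check=True)
    return True
```

Call `stop_leftover_mpiexec()` from a Windows Python session only after confirming that no NLME job is still expected to be running, since it ends every mpiexec.exe process on the machine.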

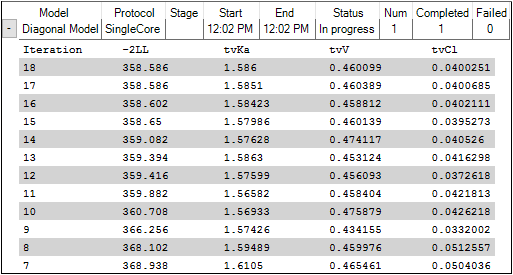

The NLME Job Status window is displayed automatically when a Phoenix Model object is executed. It can also be opened by selecting Window > View NLME Jobs from the menu.

The window provides easy monitoring of all NLME jobs that are executing as well as a history of jobs that have completed during the current Phoenix session.

During local execution of a Phoenix model, the status window displays the parameter values and gradients of each major iteration of the algorithm, with the most recent iteration at the top. The model name, protocol, stage, time that the execution started and stopped, the status of the job, number of runs, and the number of runs that completed or failed are listed at the top. (See “Job control for parallel and remote execution” for more execution options.)

The job can be canceled (no results are saved) or stopped early (results obtained up to the time when the job is ended are saved) from the right-click menu in the status window. Specifically, if Stop early is selected during an iteration, the run ends once the current iteration is completed. If Stop early is selected during the standard error calculation step, Phoenix stops the run and prepares outputs without standard error results.

When Stop early is used, the Overall results worksheet shows a Return Code of 6, indicating that the fitting was not allowed to run to convergence.

Next to the name of the model that is executed in a run, several pieces of information are shown, providing a quick summary of the job’s current state. The Status of a job can be In Progress, Finished, or Canceled.

If a job involves multiple iterations, information for each completed iteration is added as a row. Use the button at the beginning of the job row to expand/collapse the iteration rows.

Right-clicking a row displays a menu from which the job can be stopped early (the results up to the last completed iteration are saved) or canceled (no results are saved).