The Phoenix Validation Suite numerically tests Phoenix’s analytical tools to verify that software computations are accurate on the processors in your environment. The test cases demonstrate that the Phoenix computational engines perform as intended in your environment. Each test case uses Phoenix to execute the operational objects from an input project. The output worksheets from the executed project are exported from Phoenix as CSV files with column headers and, where used, column units. These exported CSV files are referred to as the ‘run’ files. Your run files are then compared to a set of CSV files containing verified results, referred to as the ‘reference’ files. The comparison computes the differences in the numeric results and tests for differences in the text results.

Reference files

The reference files have been verified by comparing either to computations in other products or to published examples: NONMEM, Microsoft Excel, SAS code, SAS procedures, S-PLUS code, results given in textbook examples or journal references, and examples and results supplied by the National Institute of Standards and Technology (NIST). The verification methods for each test case are explained in the document included with the Validation Suite titled “Computational Engines Verification Report for Phoenix WinNonlin x.x.pdf,” where “x.x” is the version number.

“Passed” status

The test case will have a status of Passed when the following conditions are true:

The number of lines in the ‘run’ and ‘reference’ files is equal,

Text values all match exactly between the ‘run’ and ‘reference’ files, and

Numerical differences between the ‘run’ and ‘reference’ files are within the acceptable tolerance (described below).
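The first two conditions can be illustrated with a minimal sketch. This is not the Validation Suite's own code: the function names `is_number` and `structural_check` are hypothetical, and the sketch assumes that a cell counts as numeric whenever it parses as a floating-point number and that the files are compared row by row and cell by cell. The numeric pairs it collects would still need to be checked against the acceptable tolerance.

```python
import csv
import io


def is_number(cell):
    """Return True if the cell text parses as a floating-point number."""
    try:
        float(cell)
        return True
    except ValueError:
        return False


def structural_check(run_text, ref_text):
    """Check line counts and text cells; collect numeric pairs for later.

    Returns (ok, numeric_pairs). ok is False when the line counts differ
    or any text cell differs (assumed cell-by-cell comparison).
    """
    run_rows = list(csv.reader(io.StringIO(run_text)))
    ref_rows = list(csv.reader(io.StringIO(ref_text)))

    # Condition 1: the number of lines must be equal.
    if len(run_rows) != len(ref_rows):
        return False, []

    numeric_pairs = []
    for run_row, ref_row in zip(run_rows, ref_rows):
        if len(run_row) != len(ref_row):
            return False, []
        for run_cell, ref_cell in zip(run_row, ref_row):
            if is_number(run_cell) and is_number(ref_cell):
                # Numeric cells are deferred to the tolerance comparison.
                numeric_pairs.append((float(run_cell), float(ref_cell)))
            elif run_cell != ref_cell:
                # Condition 2: text values must match exactly.
                return False, []
    return True, numeric_pairs
```

Header and unit rows are treated here as ordinary text cells, so a changed column name or unit would fail the check in the same way as any other text mismatch.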

Due to limitations in computer accuracy (which is 14 to 15 significant digits for double-precision arithmetic), the ‘run’ and ‘reference’ numbers are each rounded to 14 significant digits before their difference is computed. This ensures that the ‘difference’ file does not display differences smaller than 1e–14, which are beyond computer accuracy and therefore meaningless.
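The rounding step can be sketched as follows. The helper names `round_sig` and `rounded_difference` are hypothetical, and the sketch assumes ordinary round-to-nearest on the decimal representation; the rounding mode actually used by the Validation Suite is not stated here.

```python
def round_sig(value, digits=14):
    """Round a float to the given number of significant digits."""
    if value == 0:
        return 0.0
    # Scientific notation with (digits - 1) decimals keeps `digits`
    # significant digits in total.
    return float(f"{value:.{digits - 1}e}")


def rounded_difference(run, ref, digits=14):
    """Difference of the two values after rounding each one."""
    return round_sig(run, digits) - round_sig(ref, digits)


# Floating-point noise in the 17th digit disappears after rounding.
noisy = 0.1 + 0.2  # stored as 0.30000000000000004
print(rounded_difference(noisy, 0.3))  # 0.0
```

Without the rounding, the same pair would show a difference of roughly 5.6e-17, which reflects the limits of double-precision storage and not a real discrepancy between the results.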

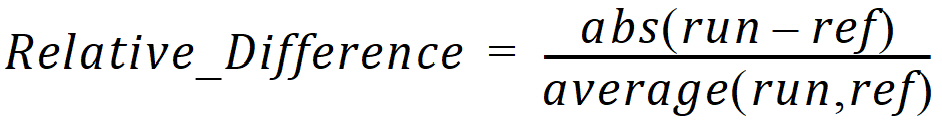

In addition, when comparing numerical results in ‘run’ files to ‘reference’ files, the Validation Suite uses an acceptable numerical tolerance when determining whether a test case passes or fails, so as to not fail test cases for insignificant differences. The use of the acceptable tolerance is relative to the magnitude of the result being validated, not an absolute numerical tolerance. For validation of WinNonlin, the acceptable tolerance is 1e–6, and the computation is:

where 'run' is the value from the ‘run’ file and 'ref' is the value from the ‘reference’ file. Therefore, the ‘difference’ file may display differences <= 1e–6 for a test case with a Passed status.
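A relative tolerance check of this kind might look like the sketch below. The exact formula used by the Validation Suite is not reproduced in this text, so the sketch assumes the common form |run - ref| / |ref| <= tolerance. The function name `within_tolerance` and the handling of a zero reference value are likewise assumptions made for illustration.

```python
def within_tolerance(run, ref, tolerance=1e-6):
    """Return True if run agrees with ref within a relative tolerance.

    Assumed form: |run - ref| / |ref| <= tolerance.
    """
    diff = abs(run - ref)
    if ref == 0:
        # Assumption: a relative difference is undefined for a zero
        # reference, so fall back to an absolute comparison.
        return diff <= tolerance
    return diff / abs(ref) <= tolerance


# A difference of 0.5 is insignificant for a result near one million...
print(within_tolerance(1000000.5, 1000000.0))  # True
# ...but a difference of 0.00001 is significant for a result near one.
print(within_tolerance(1.00001, 1.0))  # False
```

The two calls show why the tolerance is relative: the larger absolute difference passes because it is small compared to the magnitude of the result, while the smaller absolute difference fails.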