The SAS Script object imports SAS scripts and datasets for use in Phoenix.

Note:Phoenix program plugins, such as the SAS Script object, assume that the corresponding third party software is installed and running properly.

Use one of the following to add a SAS Script object to a Workflow:

Right-click menu for a Workflow object: New > SAS > SAS Script.

Main menu: Insert > SAS > SAS Script.

Right-click menu for a worksheet: Send To > SAS > SAS Script.

Note:To view the object in its own window, select it in the Object Browser and double-click it or press ENTER. All instructions for setting up and execution are the same whether the object is viewed in its own window or in Phoenix view.

Additional information is available on the following topics:

•Importing/Writing the code to access the external program

•Executing a third party tool object

Global preferences are available to the user. Some third party tool objects require certain global settings to be specified prior to use. There are optional settings to enable editing of scripts in external programs from within Phoenix. Some optional preferences can streamline the workflow by automatically filling in or setting options in the object’s UI with information entered by the user in the Preferences dialog.

•Set up access to the external program

•Specify a development environment

•Define a default working directory and output location

Set up access to the external program

-

Select Edit > Preferences.

-

In the Preferences dialog, select the object in the menu tree.

-

Enter the directory path to the executable in the first field or click Browse to locate and select the directory.

-

Click Test to make sure the correct file is selected.

Note:If JPS is run as a Windows Service, installation of SAS must be local to the JPS.

If JPS is run as a Console application, the path to the SAS executable may be a Networked location not local to the JPS.

Specify a development environment

There is a Development Environment section available on the preference pages. When the development environment is defined in this section, users can access that environment from the third party tool object’s Options tab with the Start button. This allows users to edit scripts in other specified environments, and then pull the updated scripts back into Phoenix, at which time, the script can be executed from Phoenix and the output processed through the normal Phoenix workflow.

-

In the Command field, enter the directory path to the executable or click Browse to locate and select the file.

-

In the Arguments field, enter any arguments/keywords to use when starting up the environment.

Note:Proper development environment setup for Tinn-R requires that $WorkDir\$File be entered in the Arguments field.

Define a default working directory and output location

Setting the output folder in the Preferences dialog eliminates the need to enter the information in the Options tab for each run. The fields will automatically be filled with the paths entered here.

-

Enter the directory path in the Default Output Folder field or use the Browse button.

Note:If the user specifies a default output location, the user must ensure that they have write access.

-

Turn on the Create a unique subfolder for each run checkbox to generate and then store the output from each run in a new subfolder of the output folder.

The Setup tab allows mapping of datasets and script files to the object. The Mappings panel(s) of the Setup tab displays the column headers specified in the script file. The Text panel, as shown in the previous image, allows users to map a script or control/model file to the object and view the contents.

Context associations change depending on the column headers, or input, defined in the script/control file. Not all SAS scripts define or use mapping contexts. If the /*WNL_IN */ statement does not define any mapping contexts, then there are no mapping options available in the Mappings panel.

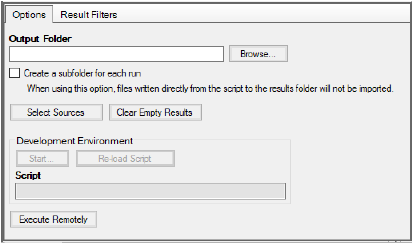

The Options tab is used to define a location for the output, access development environment, and start a remove execution.

Entering an output location is optional. If a directory is not specified then Phoenix places output in a temporary folder that is deleted after Phoenix is closed. This may be preferred when sharing third party tool projects with other users, as the output folder is machine specific and may not exist on other machines. If users want to save the results from a third party tool object run to a disk, then they must either specify an output folder or manually export each result to disk.

The results folder set in the Options tab must match the export folder location set in the script. To make sharing projects involving SAS Script objects easier, set the results folder in the Options tab to the getwd value and refer to getwd in the code. Files written to the path returned from getwd end up in the output folder and in the results.

-

Enter the output folder path in the field or click Browse to specify the output folder location.

-

Turn on the Create a subfolder for each run checkbox to add a new results folder in the specified output location each time the object is executed.

This option prevents the output from being overwritten with each run, especially if the default output folder option, under Edit > Preferences, is being used. -

Click Select Sources to include additional files along with the mapped input.

-

Click the Clear Empty Results to remove any results published by the object that are empty.

-

To start the development environment, click Start in the Development Environment section of the Options tab.

The development environment as configured in the Preferences dialog is started. (See “Specify a development environment”.) -

When script work is completed, click Reload Script to bring the modified script into Phoenix for use in the next execution of the object.

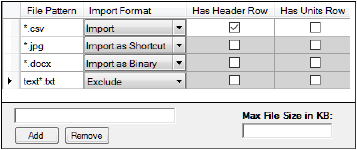

Allows specification of how different results files from the external program should be imported. Phoenix first looks for the first rule to match a file and uses that rule to import the object. The order of the rule matching is: Always Import (using normal import methods), Max File Size (will create a shortcut), Shortcut Files, Binary Files (using normal import methods). The user creates filter criteria based on filename patterns. If a maximum file size is specified, any file larger than the entered value will always be imported as a shortcut (unless the file matches filter criteria in the Always Import list).

Importing/Writing the code to access the external program

The SAS Script object must have code that will allow it to initiate the external program and submit jobs. The code can be stored as a script file, imported into the project, and mapped to the object, or the code can be directly entered into the object.

•Import and map file containing code

Import and map file containing code

-

Select a project or workflow in the Object Browser and then select a third party tool object from the Insert menu.

-

Select the File > Import menu option (or use one of the File > Custom Import options).

-

Navigate to and select the file(s) to import in the Import File(s) dialog.

Imported scripts or control/model files are added to the Code folder in the Object Browser. -

Use the pointer to select and drag a dataset from the Data folder to the object’s data mappings panel.

The dataset is mapped to the object’s Mappings panel and the dataset name is displayed beside the primary data input in the Setup tab list. -

Click the option buttons in the Mappings panel to map/unmap a data type in the dataset to the desired context. Contexts that must have data mapped to them so the object can perform its function are shaded orange.

Instead of importing and mapping a script/control file to use the third party tool object, users can write their own code or copy and paste code from another source. (For more information on the text editor, see http://www.syncfusion.com/products/user-interface-edition/windows-forms/edit/overview.

-

To enter the code manually, check the Use internal Text Object checkbox.

If a script/control file is already mapped, a message is displayed that asks if the mapped file should be copied to the internal text editor.

•Click Yes to allow editing of the currently imported file.

•Click No to remove the imported control file.

-

Type the code directly in the field.

-

When entry or modifications are complete, click Apply.

Some important things to consider when entering code in the Text panel versus importing and mapping a script/control file are:

Apply must be clicked for the code entered or modified to be accepted.

Changes made in the Text panel are not applied to the file on the disk.

The script can be modified either in the Code folder or in the object itself and these are kept in sync.

Once the user switches to Use Internal Text, any subsequent changes are not kept in sync with the script in the Code folder.

Changes made to the special comment (/*WNL_IN */) statement are reflected in the data Mappings panel. Changes made to a mapped script do not change the script in the Code folder.

The input can be defined within the script as a shortcut by adding the following to the script.

*PHX_SHORTCUT(Data);

The text entered between the parentheses is used as the variable’s name (e.g., Data).

&let Data=”C:\\Path\\To\\File”;

In the script, the user can use the variable assigned the path to read or manipulate the file.

To add a shortcut to a script

-

With the object inserted in the project, click the SAS Script in the Setup list.

-

Either type the script directly in the field or click

and select the script from the Code folder.

and select the script from the Code folder. -

Type the following as the first line in the script:

*PHX_SHORTCUT(Data);

-

Click Apply.

-

Select the new Data item in the list.

The Data panel shows the full path behind the shortcut.

&let Data=”C:\\Path\\To\\File”;

The log file produced from executing the object contains the definition used for the shortcut.

The Result Filters tab allows users to define filters that determine whether files of a certain name or size are returned to Phoenix as a shortcut, a binary file, a complete copy, or returned at all.

-

Type the file pattern to serve as a filter into the field near the bottom of the tab and click Add. (Enter full file names or use the wildcard “*”, e.g., *.txt.)

-

To remove a pattern from the list, select it and click Remove.

-

For each pattern, select how files matching the pattern are to be handled:

Import: Default file handling for imported data of that file type is used to import the file

Import as Shortcut: The file itself is not imported, however, a shortcut is created that points to the complete file

Import as Binary: The file is imported as a binary object, with no conversion to any specific Phoenix type

Exclude: The file is not imported

Should a file match several specified patterns, the precedence of processing is: Exclude, Import, Shortcuts, Binary.

-

For .csv and .dat output files, check the Has Header Row box to indicate that files matching the file pattern have a header row.

-

For .csv and .dat output files, select the Has Units Row option to indicate that files matching the file pattern have a units row.

-

Enter maximum size that an imported complete file can be in the Max File Size in KB field.

Any file that exceeds this size is imported as a shortcut (unless the file matches a File Pattern whose Import Format is set to Import).

Executing a third party tool object

Click  or to run the job remotely (available for all third party tool objects except the SigmaPlot object), click Execute Remotely in the Options tab.

or to run the job remotely (available for all third party tool objects except the SigmaPlot object), click Execute Remotely in the Options tab.

Executing a third party tool object remotely sends the job to the server that is defined in the Preferences dialog (Edit > Preferences > Remote Execution). See “Phoenix Configuration” for instructions. The project is saved automatically.

The project must have been saved at least once prior to executing on RPS, otherwise execution will not pass validation and a validation message will be generated.

At this point, the step being executed on RPS, along with any dependent objects, has been locked. It is now safe to close the project or to continue working in Phoenix.

In Phoenix, some objects in a workflow allow the user to click and execute the last object in a chain and it will re-run any necessary objects earlier in the workflow. This is not true for the R object. Either the workflow or the individual objects must be executed to obtain the current source data for the R object.

Phoenix only supports using SAS Version 5 transport files (*.xpt) in order to export datasets from Phoenix to SAS. The SAS transport file names must be specified in the SAS script.

The output files are also defined in the SAS script, so only common output files that Phoenix always generates are listed here.

All output files defined in the script are placed in the output folder specified in the Options tab. If an output folder is not specified, the file are placed in a temporary directory in C:\Users\<user name>\AppData\Local\Temp, depending on the operating system. The directory is deleted when Phoenix is closed.

Log File: Text file that contains a list of the functions SAS performs based on the script.

Settings: Text file that contains third party tool object settings internal to Phoenix.