Job control for parallel and remote execution


Users can execute Phoenix NLME jobs remotely using either the NLME Job Control System or Phoenix JMS (Job Management System). The two options compare as follows:

| | NLME Job Control System | Phoenix JMS |
|---|---|---|
| Remote Windows submission | No | Yes |
| Remote Linux submission | Yes | No |
| Disconnect/reconnect/stop | Yes | Yes |
| Progress reporting | Yes | Yes |
| Remote software required | GCC; R (batchtools, XML, reshape, Rmpi, Certara.NLME8) | Full Phoenix installation |
| Parallelization method | Local MPI, MPI Cluster, Linux Grid, MultiCore, LSF | Local MPI |

Caution: The number of available cores specified for a grid must not exceed the total number of cores for which the grid is configured. Otherwise, the software may request more cores for the computation than are available, and the job will remain in the queue.
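The caution amounts to a simple pre-submission check. The Python sketch below is illustrative only: `validate_core_request` is a hypothetical helper, not part of Phoenix, and it assumes you already know both the number of cores the job will request and the number of cores the grid is configured for.

```python
def validate_core_request(requested_cores: int, grid_total_cores: int) -> None:
    """Raise ValueError if a job asks for more cores than the grid provides.

    Hypothetical helper; it only illustrates the rule in the caution:
    requested cores must not exceed the grid's configured total.
    """
    if requested_cores < 1:
        raise ValueError("requested_cores must be at least 1")
    if requested_cores > grid_total_cores:
        # A request like this could leave the job waiting in the queue.
        raise ValueError(
            f"requested {requested_cores} cores, but the grid is configured "
            f"for only {grid_total_cores}"
        )
```

For example, `validate_core_request(24, 32)` returns without error, while `validate_core_request(48, 32)` raises `ValueError`.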

This section focuses on the Job Control Setup; for information on JMS, refer to “Job Management System (JMS)”. Phoenix NLME jobs can be executed on a number of different platform setups, enabling the program to take full advantage of the available computing resources:

•Single core on local host

•By Subject MPI parallelization on local host

•By Sample multiple core parallelization on local or MPI cluster host

•By Sample MPI parallelization on local host

•Remote Linux execution

•Remote Linux execution with By Sample multiple core parallelization

•Remote GRID parallel submission of supported scenarios

Additional software and libraries are required for certain platform setups.


For “By Subject” parallelization, the MPICH2 1.4.1 software package is required. It is installed during a Complete Installation of Phoenix and can be selected during a “Custom Installation”. MPICH2 facilitates message passing for the distributed-memory applications used in parallel computing. (If needed, the mpich2-1.4.1p1-win-x86-64.msi installer is located in <Phoenix_install_dir>\Redistributables.) MPICH2 must be installed on all Windows machines that are included in the MPI ring or that are used to submit jobs to it. If another MPICH service is running, you must disable it. Currently, MPI is supported only on Windows.


For “By Sample” parallel processing on a GRID, the following must be installed:

•R 3.2 (or earlier) can be obtained from http://cran.r-project.org.

•R batchtools (v 0.9.2) handles submission of NLME replicates to a Linux/Windows GRID. Install from the R user interface using the Packages >> Install Packages menu option.

•R reshape (v 0.8.6) is used for summarizing NLME spreadsheets. Install on all Linux machines from the R user interface using the Packages >> Install Packages menu option.

•R XML (v 3.98) is used to manage reading/writing progress.xml. Install from the R user interface using the Packages >> Install Packages menu option.

•Certara.NLME8_0.0.1.0000.tar.gz is the R package for NLME distributed with Phoenix, located in <Phoenix_install_dir>\application\lib\NLME\Executable. From the R user interface (as administrator), type the following command:

install.packages("Certara.NLME8_0.0.1.0000.tar.gz", repos=NULL, type="source")

•SSH/SFTP must be installed on all Linux machines that will be used with the MultiCore parallelization method or that act as a submission host to a GRID. SSH is installed by default on Red Hat Enterprise Linux (RHEL) version 6.x. To facilitate job submission from Windows to Linux, NLME in Phoenix (on Windows) contains an SSH library.

•For grid execution (SGE/LSF/TORQUE), installing Open MPI (v 1.10.6) as the parallel platform on the grid is recommended. See https://www.open-mpi.org/faq/ for information. Standard grids have Open MPI installed by default.
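Before a first GRID run, it can help to confirm the R-side prerequisites listed above on the submission host. The Python sketch below is a hypothetical checklist, not a Phoenix tool. It assumes you have already collected the R version string and the installed package names yourself (for example, with `as.character(getRversion())` and `rownames(installed.packages())` in R), and it checks only the R version ceiling and package presence, not package versions, SSH, or Open MPI.

```python
from typing import Iterable, List, Tuple

# Requirements transcribed from the GRID prerequisite list in this guide.
GRID_R_PACKAGES = ("batchtools", "reshape", "XML", "Certara.NLME8")
GRID_MAX_R_VERSION: Tuple[int, int] = (3, 2)  # "R 3.2 (or earlier)"


def grid_prerequisite_problems(r_version: str, installed: Iterable[str]) -> List[str]:
    """Return human-readable problems; an empty list means the checks passed."""
    problems = []
    # Compare major.minor only, so any 3.2.x patch release is accepted.
    major_minor = tuple(int(part) for part in r_version.split(".")[:2])
    if major_minor > GRID_MAX_R_VERSION:
        problems.append(f"R {r_version} is newer than 3.2")
    installed_set = set(installed)
    for package in GRID_R_PACKAGES:
        if package not in installed_set:
            problems.append(f"R package {package} is not installed")
    return problems
```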


For “By Sample” parallel processing on a Windows MPI Cluster, the following must be installed:

•R 3.1.1 (or earlier), available from http://cran.r-project.org.

•Rmpi (Rmpi_0.6-3.zip) provides support for executing NLME replicates in parallel on an MPI cluster. This file is distributed with Phoenix and is located in <Phoenix_install_dir>\Redistributables. Install on all Windows hosts by unzipping it into the following directory: <R_install_dir>\R\R-3.1.1\library.

•R reshape (v 0.8.6) is used for summarizing NLME spreadsheets. Install on all Windows hosts from the R user interface using the Packages >> Install Packages menu option.

•R XML (v 3.98) is used to manage reading/writing progress.xml. Install from the R user interface using the Packages >> Install Packages menu option.

•Certara.NLME8_0.0.1.0000.tar.gz: R package for NLME distributed with Phoenix, located in <Phoenix_install_dir>\application\lib\NLME\Executable. From the R user interface (as administrator), type the following command:

install.packages("Certara.NLME8_0.0.1.0000.tar.gz", repos=NULL, type="source")

Note: R 3.1.1 was the last version of R that worked with Rmpi, and Rmpi is no longer being updated. Users can upgrade to a more recent version of R and run any of the other Job Control modes, but not Remote MPI Clusters.
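The note reduces to a version comparison. The sketch below uses a hypothetical helper, not a Phoenix API. It treats R 3.1.1 as the newest release usable with Rmpi, as stated in the note, and pads short version strings such as "3.1" with zeros before comparing.

```python
from typing import Tuple

# Per the note: R 3.1.1 was the last R release that worked with Rmpi.
RMPI_MAX_R_VERSION: Tuple[int, int, int] = (3, 1, 1)


def parse_r_version(version: str) -> Tuple[int, ...]:
    """Turn "3.1.1" into (3, 1, 1); short forms like "3.1" become (3, 1, 0)."""
    parts = tuple(int(part) for part in version.strip().split("."))
    return parts + (0,) * (3 - len(parts))


def supports_remote_mpi_cluster(r_version: str) -> bool:
    """True if this R version can still be used for Remote MPI Clusters."""
    return parse_r_version(r_version) <= RMPI_MAX_R_VERSION
```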

The R packages mentioned above are also available at https://cran.r-project.org/web/packages.

MPI configuration is done by the Phoenix installer at the time of installation.


To check MPI operation, see if there is an SMPD service running on Windows by entering the following into a command shell:

smpd -status

If it is reported that no SMPD is running, enter the following command:

smpd -install

Running smpd -status again should report that SMPD is running on the local Windows host.
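The status check and install steps can be scripted. The Python sketch below assumes that the `smpd` executable is on the PATH and that `smpd -status` prints a message containing "no smpd running" when the service is down and "smpd running" when it is up. Verify the exact wording on your MPICH2 installation before relying on it. The command runner is a parameter so the logic can be exercised without MPICH2 installed.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], str]


def run_command(args: Sequence[str]) -> str:
    """Run a command and return its stdout and stderr as one string."""
    result = subprocess.run(list(args), capture_output=True, text=True)
    return result.stdout + result.stderr


def smpd_is_running(status_text: str) -> bool:
    # Assumed wording: "smpd running on <host>" vs. "no smpd running on <host>".
    text = status_text.lower()
    return "smpd running" in text and "no smpd" not in text


def ensure_smpd_running(run: Runner = run_command) -> bool:
    """Install the SMPD service if it is not running; return the final state."""
    if smpd_is_running(run(["smpd", "-status"])):
        return True
    run(["smpd", "-install"])
    # Re-check, mirroring the manual "run smpd -status again" step.
    return smpd_is_running(run(["smpd", "-status"]))
```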

To register a user account with MPI on the submission host, enter the following into a command shell:

mpiexec -register

This will prompt for a username and password, and should report that the password is encrypted in the registry. Registering with MPI is only required on the submission host; however, the username and password must be the same on all machines in the MPI cluster. This step must be repeated every time a user changes their password.

Currently, NLME supports only Windows-based MPI clusters.

To define a Windows MPI-cluster, enter the hostname (or IP address) of each machine into the following command:

smpd -sethosts <hostname 1> <hostname 2> … <hostname n>

(You can find a machine’s hostname by entering hostname into a command shell.)
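If the host list is long or generated from an inventory, the `smpd -sethosts` argument list can be assembled programmatically. In the sketch below, `sethosts_args` is a hypothetical helper. Besides plain hostnames or IP addresses, it accepts (hostname, cores) pairs rendered as `hostname:cores`, following the `localhost:1 remoteMachine:24` form used in this guide's shared-drive example. The resulting list could be passed to `subprocess.run` on the submission host.

```python
from typing import Iterable, List, Tuple, Union

HostSpec = Union[str, Tuple[str, int]]


def sethosts_args(hosts: Iterable[HostSpec]) -> List[str]:
    """Build the argv for `smpd -sethosts` from hostnames or (hostname, cores) pairs."""
    args = ["smpd", "-sethosts"]
    for spec in hosts:
        if isinstance(spec, tuple):
            name, cores = spec
            if cores < 1:
                raise ValueError(f"{name}: core count must be at least 1")
            args.append(f"{name}:{cores}")  # e.g. "remoteMachine:24"
        else:
            args.append(spec.strip())  # bare hostname or IP address
    if len(args) == 2:
        raise ValueError("at least one host is required")
    return args
```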

To run an MPI-cluster with a shared drive:

Define the cluster in the job control file. For example:

# Windows MPI cluster with 24 nodes

LocalHost|Windows|MPI| VM_MPI_Cluster | | M:\NLME_SHARED |C:\Program Files\R\R-3.1.1\bin\x64 | 24

Use the following command to set up the cluster:

smpd -sethosts localhost:1 remoteMachine:24
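The job control file entry in the example is a single `|`-delimited line, and lines starting with `#` are comments. The Python sketch below parses entries of that shape. The field names are hypothetical, inferred from the one example line in this guide: host, platform, parallelization method, a cluster label, an unnamed field that is empty in the example, shared directory, R binary directory, and core count. They are not Phoenix's documented schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class JobControlEntry:
    # Hypothetical field names inferred from the single example line.
    host: str
    platform: str
    method: str
    label: str
    unnamed: str  # empty in the example; its meaning is not stated in this guide
    shared_dir: str
    r_bin_dir: str
    cores: int


def parse_job_control(text: str) -> List[JobControlEntry]:
    """Parse '|'-delimited job control lines, skipping blanks and '#' comments."""
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        fields = [field.strip() for field in line.split("|")]
        if len(fields) != 8:
            raise ValueError(f"expected 8 '|'-separated fields, got {len(fields)}: {line!r}")
        *text_fields, cores = fields
        entries.append(JobControlEntry(*text_fields, cores=int(cores)))
    return entries
```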

To check the current configuration of the MPI-ring enter the following command:

smpd -hosts

Note: If one of the MPI processes crashes, other MPI processes and the parent process mpiexec.exe may be left running, putting Phoenix NLME in an unpredictable state. Use Task Manager to check whether mpiexec.exe is still running and, if so, stop it. This also stops any other MPI processes.
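As a command-line alternative to Task Manager, the Windows `tasklist` command can locate a leftover `mpiexec.exe`. The sketch below parses the CSV output of `tasklist /FO CSV /NH` and returns the PIDs of any `mpiexec.exe` processes. It assumes the default column order (image name first, PID second). Each returned PID could then be stopped with `taskkill /PID <pid> /F`.

```python
import csv
import io
import subprocess
from typing import List


def mpiexec_pids(tasklist_csv: str) -> List[int]:
    """Extract mpiexec.exe PIDs from `tasklist /FO CSV /NH` output."""
    pids = []
    for row in csv.reader(io.StringIO(tasklist_csv)):
        # Expected row shape: "mpiexec.exe","4321","Console","1","5,120 K".
        # Blank lines and "INFO: No tasks are running..." are skipped.
        if len(row) >= 2 and row[0].strip().lower() == "mpiexec.exe":
            pids.append(int(row[1]))
    return pids


def find_mpiexec_pids() -> List[int]:
    """Run tasklist on the local Windows host and return mpiexec.exe PIDs."""
    result = subprocess.run(
        ["tasklist", "/FI", "IMAGENAME eq mpiexec.exe", "/FO", "CSV", "/NH"],
        capture_output=True,
        text=True,
        check=True,
    )
    return mpiexec_pids(result.stdout)
```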

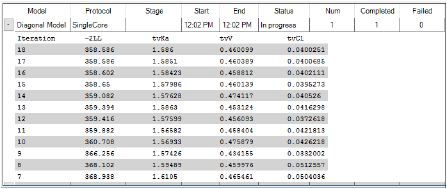

The NLME Job Status window is displayed automatically when a Phoenix Model object is executed, and can also be opened by selecting Window > View NLME Jobs from the menu. The window lets you monitor all NLME jobs that are executing and shows a history of jobs completed during the current Phoenix session.

During local execution of a Phoenix Model, the status window displays the parameter values and gradients of each major iteration of the algorithm, with the most recent iteration at the top. The model name, protocol, stage, time that the execution started and stopped, the status of the job, number of runs, and the number of runs that completed or failed are listed at the top. (See “Job control for parallel and remote execution” for more execution options.)

The job can be canceled (no results are saved) or stopped early (results obtained up to the point when the job is ended are saved) from the menu displayed by right-clicking the job in the status window. If Stop Early is selected during an iteration, the run ends once the current iteration is completed. If Stop Early is selected during the standard error calculation step, Phoenix stops the run and prepares outputs without standard error results.

When a run is stopped early, the Overall results worksheet shows a Return Code of 6, indicating that the fitting was not allowed to run to convergence.

Next to the name of the model that is executed in a run, several pieces of information are shown providing a quick summary of the job’s current state. The Status of a job can be In Progress, Finished, or Canceled.

If a job involves multiple iterations, information for each completed iteration is added as a row. Use the button at the beginning of the job row to expand/collapse the iteration rows.

Right-clicking a row displays a menu from which the job can be stopped early (the results up to the last completed iteration are saved) or canceled (no results are saved).